Minor Software

Project details

Project type: Minor

ECTS: 30

Time: 2016/2017

Client: TU Delft

Nature: Individual & Group Project

Keywords: AI, Bayesian network, C#, Evolutionary algorithms, Genetic algorithm, Java, Neural network, Object-oriented programming, Scrum & Unity

Summary

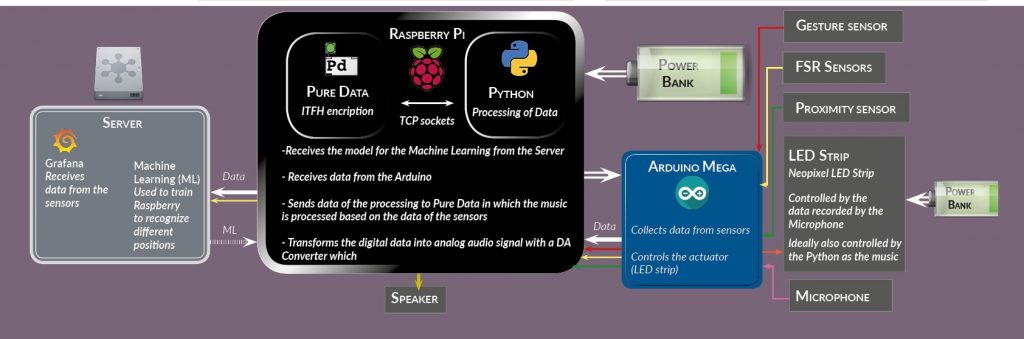

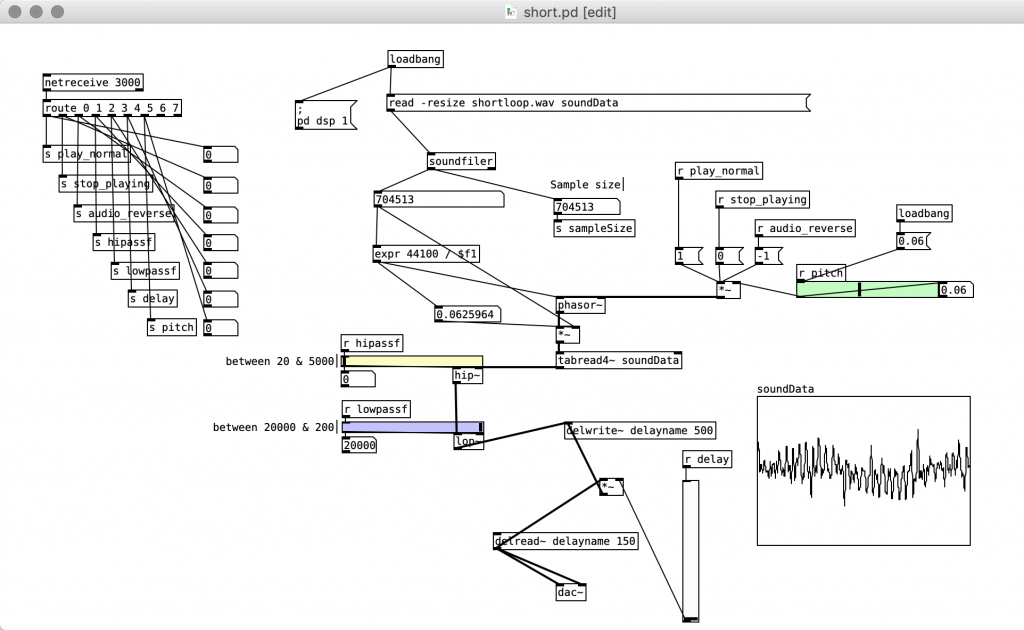

During my bachelor's, I did a minor in software at TU Delft. I followed several computer science courses and took part in a project in which we had to apply our knowledge by creating a game in Unity. In this period I learned to program in Java and C# using the object-oriented programming paradigm. I also took a course on computational intelligence, where I learned the basics of artificial intelligence, Bayesian networks, evolutionary algorithms and neural networks. During the game project, I led our multidisciplinary team and learned how to work with Scrum.

Courses

During my minor, I took several computer science courses. In the course on object-oriented programming (OOP), I learned how to program in Java in an object-oriented way. In the course on computational intelligence, I learned the foundations of AI and how to apply three types of AI: Bayesian networks, evolutionary algorithms and neural networks. The neural networks were built in MATLAB and compared with MATLAB's built-in tool. The evolutionary algorithms were programmed in Java.

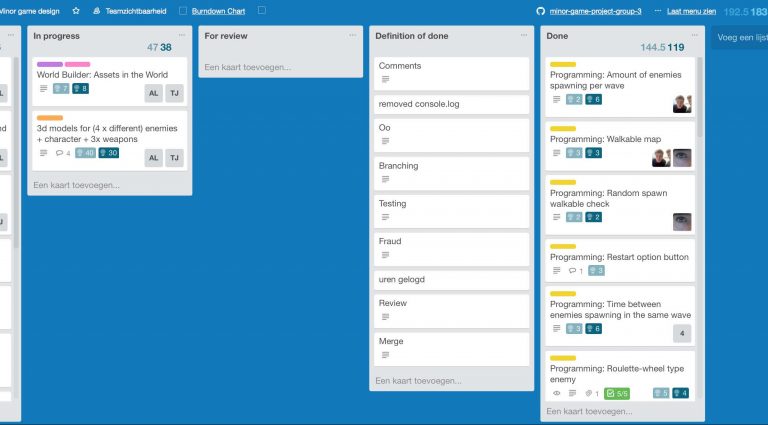

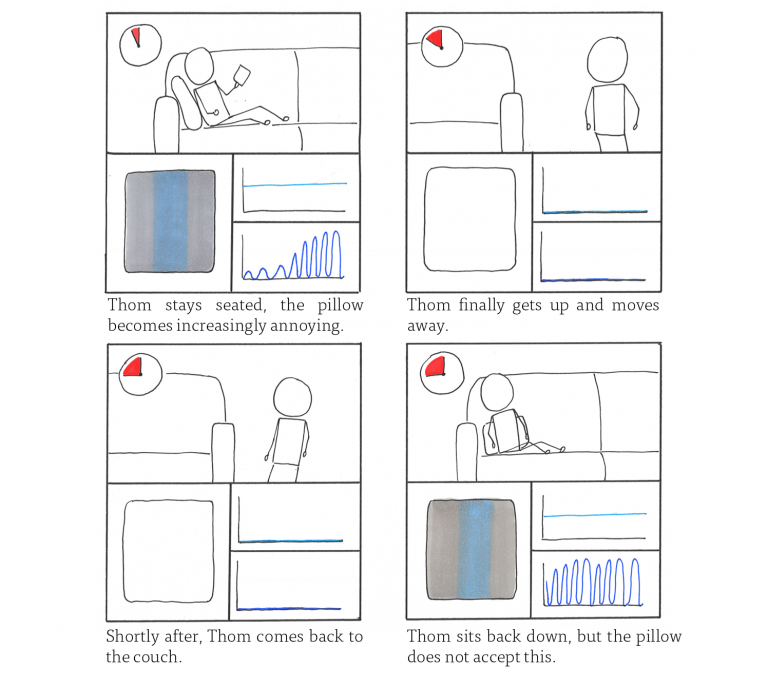

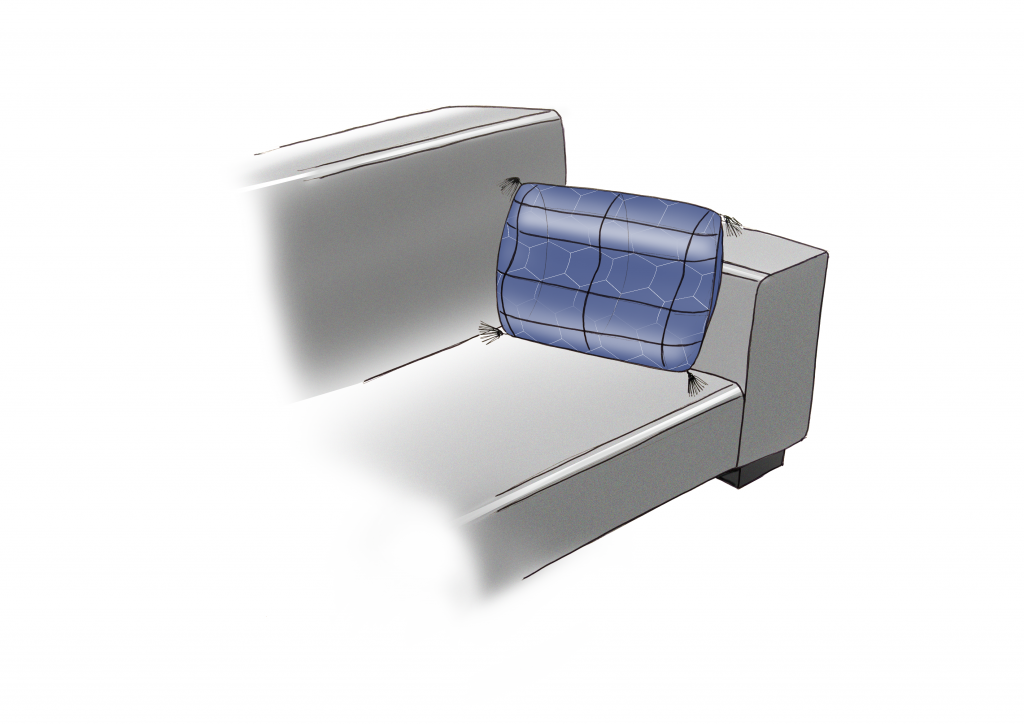

Game Project

During the second half of the minor, we had a project in which we applied our knowledge by creating a video game in Unity. This was a group project with 6 members from the minor, each from a different study programme at TU Delft. My main responsibility was to lead the group in successfully creating a game. To do so, we used Scrum, and I learned how to be the Scrum master. Besides being the Scrum master, I helped with the game design, game UI and game graphics. I also programmed an evolutionary algorithm into our game, which created a dynamic difficulty in which the enemies adapt to the player's playstyle.